Welcome to ShortScience.org!

- ShortScience.org is a platform for post-publication discussion aiming to improve accessibility and reproducibility of research ideas.

- The website has 1583 public summaries, mostly in machine learning, written by the community and organized by paper, conference, and year.

- Reading summaries of papers is a useful way to gain another reader's perspective and insight: why they liked or disliked a paper, and their attempt to demystify its complicated sections.

- Writing summaries is also a good exercise for understanding a paper, because explaining it forces you to challenge your assumptions.

- Finally, you can keep up to date with the flood of research by reading the latest summaries on our Twitter and Facebook pages.

On Using Very Large Target Vocabulary for Neural Machine Translation

Association for Computational Linguistics - 2015 via Local Bibsonomy

Keywords: dblp

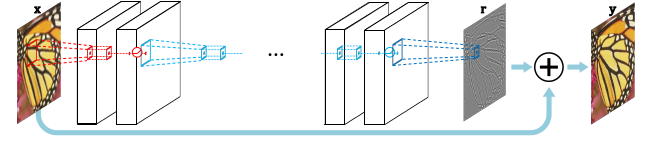

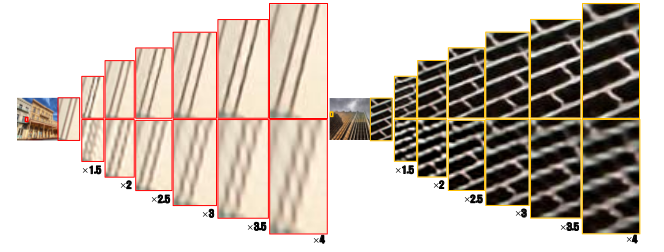

Accurate Image Super-Resolution Using Very Deep Convolutional Networks

Conference on Computer Vision and Pattern Recognition - 2016 via Local Bibsonomy

Keywords: dblp

Training Confidence-calibrated Classifiers for Detecting Out-of-Distribution Samples

International Conference on Learning Representations - 2018 via Local Bibsonomy

Keywords: dblp

Generating Natural Adversarial Examples

International Conference on Learning Representations - 2018 via Local Bibsonomy

Keywords: dblp

Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift

International Conference on Machine Learning - 2015 via Local Bibsonomy

Keywords: dblp
